The MMPUG project focuses on developing autonomous systems for rapid mapping and exploration in large, unknown, and often hazardous environments. Our goal is to assist a human operator by reducing their workload and enabling effective coordination of multi-robot teams in time-sensitive scenarios like search and rescue.

Our Design Philosophy

Our architecture is built on crucial lessons learned from real-world deployments, including our team’s participation in the DARPA Subterranean (SubT) Challenge. The following principles, distilled from those lessons, guide every aspect of our system design:

- Operator-Adjustable Autonomy: The operator must be able to flexibly adjust the robot’s level of autonomy to adapt to changing situations.

- System Interoperability: Seamless integration between different types of robots (e.g., wheeled and legged) is essential for dynamic deployment.

- Centralized Control Flow: Careful management of commands is necessary to prevent conflicts and ensure coordinated behavior across the team.

- Adaptive User Interface: The operator’s interface should dynamically adapt to the current context, presenting only valid actions to reduce cognitive load.

- Extensible System Design: The architecture must be modular to allow for the rapid development and integration of new capabilities.

Key Architectural Features

Our system is designed from the ground up to embody these lessons, resulting in a powerful and flexible platform for multi-robot operations.

Hierarchical Control & Sliding-Mode Autonomy

At the core of our system is the principle of "sliding-mode autonomy," giving the operator the flexibility to blend human intuition with machine precision. This is managed by a Behavior Tree on each robot, allowing the operator to seamlessly switch between four distinct levels of control:

- Full Manual: Direct teleoperation where the operator has complete, low-level control over the robot's movements.

- Smart Joystick: An assisted driving mode where the operator provides a general direction, and the robot's onboard planner intelligently navigates around obstacles.

- Waypoint Mode: The operator assigns a specific goal on the map, and the robot autonomously plans and executes the entire path to get there, as shown in the video.

- Exploration Mode: The highest level of autonomy, where the robot is tasked to explore an unknown area and makes its own decisions to efficiently map the environment.

A high-speed UGV navigating a complex corridor and obstacle course using Waypoint mode.
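As a rough illustration of how a Behavior Tree can gate the four control levels, the Python sketch below uses a top-level selector whose children only run when the operator-selected level matches. The classes (`Status`, `AutonomyLevel`, `ModeGate`, `Selector`) and the action names are hypothetical placeholders, not the MMPUG implementation; real actions would wrap the teleoperation, assisted-driving, waypoint-planning, and exploration controllers.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = auto()
    FAILURE = auto()
    RUNNING = auto()


class AutonomyLevel(Enum):
    FULL_MANUAL = 1
    SMART_JOYSTICK = 2
    WAYPOINT = 3
    EXPLORATION = 4


class Node:
    """Base behavior-tree node; `bb` is a shared blackboard dictionary."""

    def tick(self, bb: Dict) -> Status:
        raise NotImplementedError


@dataclass
class Action(Node):
    name: str
    fn: Callable[[Dict], Status]

    def tick(self, bb: Dict) -> Status:
        return self.fn(bb)


@dataclass
class ModeGate(Node):
    """Runs its child only while the operator-selected level matches."""
    level: AutonomyLevel
    child: Node

    def tick(self, bb: Dict) -> Status:
        if bb["level"] is not self.level:
            return Status.FAILURE
        return self.child.tick(bb)


@dataclass
class Selector(Node):
    """Ticks children in order and returns the first non-FAILURE result."""
    children: List[Node] = field(default_factory=list)

    def tick(self, bb: Dict) -> Status:
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


def make_action(name: str) -> Action:
    """Hypothetical stand-in for a real controller: records which one is active."""
    def run(bb: Dict) -> Status:
        bb["active"] = name
        return Status.RUNNING
    return Action(name, run)


root = Selector([
    ModeGate(AutonomyLevel.FULL_MANUAL, make_action("teleop")),
    ModeGate(AutonomyLevel.SMART_JOYSTICK, make_action("assisted_drive")),
    ModeGate(AutonomyLevel.WAYPOINT, make_action("plan_to_waypoint")),
    ModeGate(AutonomyLevel.EXPLORATION, make_action("explore")),
])

bb = {"level": AutonomyLevel.WAYPOINT}
root.tick(bb)
print(bb["active"])  # plan_to_waypoint
```

In this sketch, changing `bb["level"]` between ticks is all it takes to slide between levels; a production tree would also handle preemption and safety checks, which are omitted here.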

Advanced SLAM for Challenging Environments

Standard mapping algorithms often fail in visually repetitive or sparse environments. We have developed custom SLAM (Simultaneous Localization and Mapping) capabilities designed to be robust in difficult scenarios, such as long, feature-poor hallways and large, multi-floor structures. Our system maintains accurate localization and creates consistent, globally aligned maps where other methods would accumulate significant drift.

Demonstration of our robust SLAM performance in a long, feature-poor corridor.
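To make the drift problem concrete, the sketch below integrates odometry along a perfectly straight corridor with a small, constant heading error per step, the kind of error that goes uncorrected when there are few distinctive features to match against. It illustrates the failure mode only; it is not our SLAM method, and the 0.1° per-metre bias is a hypothetical value chosen for illustration.

```python
import math


def dead_reckon(steps: int, step_len: float, heading_bias_rad: float):
    """Integrate straight-line odometry with a constant per-step heading error.

    The robot truly drives straight along +x; each odometry step is assumed to
    add an extra `heading_bias_rad` to the estimated heading. Returns the
    estimated (x, y) after every step.
    """
    x = y = heading = 0.0
    trajectory = []
    for _ in range(steps):
        heading += heading_bias_rad  # error compounds because nothing corrects it
        x += step_len * math.cos(heading)
        y += step_len * math.sin(heading)
        trajectory.append((x, y))
    return trajectory


# Hypothetical numbers: 1 m steps down a 100 m corridor, 0.1 deg bias per step.
traj = dead_reckon(steps=100, step_len=1.0, heading_bias_rad=math.radians(0.1))
for dist in (10, 50, 100):
    _, y = traj[dist - 1]
    print(f"{dist:>3} m driven -> lateral error {abs(y):.2f} m")
```

With a constant heading bias, lateral error grows roughly with the square of the distance travelled, which is why long, feature-poor hallways are especially punishing without loop closures or other global corrections.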

Coordinated Behaviors: Dynamic Convoy Formation

The modular design simplifies the development of complex multi-agent behaviors. In convoy mode, an operator controls only the lead vehicle, while follower robots use a dedicated 'convoy coordinator' module to autonomously maintain formation. New robots can also be added to the convoy on the fly without interrupting the mission.

Robots forming up and maintaining a convoy, with new members joining dynamically.
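One plausible way a convoy coordinator could assign formation targets is sketched below: the lead vehicle drops breadcrumbs along its path, and each follower is sent to the point on that trail a fixed arc length behind the lead, proportional to its slot in the convoy. The `ConvoyCoordinator` class, its 5 m spacing, and the breadcrumb scheme are assumptions for illustration, not the actual 'convoy coordinator' module.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class ConvoyCoordinator:
    spacing_m: float = 5.0                             # hypothetical gap between vehicles
    members: List[str] = field(default_factory=list)  # members[0] is the operator-driven lead
    trail: List[Point] = field(default_factory=list)  # breadcrumbs dropped by the lead

    def record_lead_pose(self, p: Point) -> None:
        self.trail.append(p)

    def join(self, robot_id: str) -> int:
        """Append a robot to the tail of the convoy (idempotent); returns its slot."""
        if robot_id not in self.members:
            self.members.append(robot_id)
        return self.members.index(robot_id)

    def goal_for(self, robot_id: str) -> Point:
        """Point on the lead's trail that lies slot * spacing_m behind the lead."""
        if not self.trail:
            raise ValueError("lead has not reported any poses yet")
        remaining = self.members.index(robot_id) * self.spacing_m
        # Walk the trail backwards from the newest breadcrumb.
        for i in range(len(self.trail) - 1, 0, -1):
            (x1, y1), (x0, y0) = self.trail[i], self.trail[i - 1]
            seg = math.hypot(x1 - x0, y1 - y0)
            if seg >= remaining:
                t = remaining / seg if seg > 0 else 0.0
                return (x1 + (x0 - x1) * t, y1 + (y0 - y1) * t)
            remaining -= seg
        return self.trail[0]  # trail shorter than the gap: hold at the oldest breadcrumb


cc = ConvoyCoordinator(spacing_m=5.0)
cc.join("lead")
cc.join("ugv1")
for x in range(21):                       # lead drives 20 m along +x
    cc.record_lead_pose((float(x), 0.0))
cc.join("ugv2")                           # joins mid-mission and takes the next slot
print(cc.goal_for("ugv1"), cc.goal_for("ugv2"))  # (15.0, 0.0) (10.0, 0.0)
```

Because a late joiner simply takes the next slot at the tail, existing followers keep their targets unchanged, which is one way to let robots join without interrupting the mission. Following the lead's actual path, rather than a fixed offset in its frame, also keeps followers on ground the lead has already traversed.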

Coordinated Behaviors: Communication-Aware Peel-Off

Operating in communication-degraded environments is a major challenge. Our convoy can perform a strategic "peel-off" maneuver, where trailing robots are commanded to autonomously stop and act as static communication relays. This intelligently extends the network range, ensuring the lead robots stay connected to the base station as they push deeper into an unknown area.

A follower robot peeling off from the convoy to become a comms relay.
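The peel-off decision can be sketched as a simple rule over an ordered convoy: whenever the link between the most recent relay (or the base station) and the trailing robot drops below a threshold, that trailing robot stops and becomes the next relay. The distance-based `distance_link` model, the 50 m range, and the 0.4 threshold are hypothetical; a fielded system would use measured link quality rather than distance.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Point = Tuple[float, float]


def distance_link(a: Point, b: Point, max_range: float = 50.0) -> float:
    """Hypothetical link-quality model: 1.0 when co-located, 0.0 at max_range."""
    return max(0.0, 1.0 - math.dist(a, b) / max_range)


@dataclass
class PeelOffMonitor:
    base: Point
    convoy: List[str]                              # ordered lead first, tail last
    link_quality: Callable[[Point, Point], float]
    threshold: float = 0.4                         # peel off before the link actually fails
    relays: List[Tuple[str, Point]] = field(default_factory=list)

    def anchor(self) -> Point:
        """Newest static relay, or the base station if none has peeled off yet."""
        return self.relays[-1][1] if self.relays else self.base

    def update(self, positions: Dict[str, Point]) -> Optional[str]:
        """Check the tail's link; if it is too weak, stop the tail as a relay."""
        if len(self.convoy) <= 1:
            return None                            # never peel off the lead
        tail = self.convoy[-1]
        if self.link_quality(self.anchor(), positions[tail]) < self.threshold:
            self.convoy.pop()
            self.relays.append((tail, positions[tail]))
            return tail
        return None


mon = PeelOffMonitor(base=(0.0, 0.0), convoy=["lead", "f1", "f2"],
                     link_quality=distance_link)
peeled = []
for x in range(101):                               # convoy advances 100 m along +x
    pos = {"lead": (float(x), 0.0), "f1": (x - 5.0, 0.0), "f2": (x - 10.0, 0.0)}
    who = mon.update(pos)
    if who:
        peeled.append(who)
print(peeled, mon.relays)
```

Setting the threshold above the point where the link actually fails means each relay stops while it can still reach its anchor, so the chain back to the base station stays connected.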

Advanced Vertical Mobility & Staircase Navigation

Navigating between floors is a critical capability for exploring complex urban and subterranean structures. Our system features a comprehensive suite of tools for detecting and traversing staircases using a heterogeneous team.

Long-Range Traversal

Our legged Spot robot is capable of autonomously navigating long and challenging staircases, including multiple flights in a single traversal, enabling rapid access to upper and lower floors.

Spot traversing multiple continuous flights of stairs while mapping and estimating the staircases.

Heterogeneous Map Merging & Tasking

Our system enables true robot collaboration. A high-speed wheeled robot can first discover and map a staircase on the ground floor. This staircase location is then automatically shared across the network, allowing a legged Spot robot to be tasked with autonomously navigating to that precise location to begin exploration of the next level.

A wheeled robot detects a staircase, and a Spot robot uses this shared data to navigate to it.
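The hand-off from a wheeled scout to a legged robot can be sketched as a shared registry of staircase reports plus a capability-aware tasking rule: any robot may publish a staircase, but only idle robots that can climb stairs are eligible to be sent to it. The `StaircaseReport` fields, the `SharedStairRegistry` class, and the nearest-robot assignment rule are illustrative assumptions; in practice the registry would be backed by the team's networked map sharing rather than an in-process dictionary.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class StaircaseReport:
    stair_id: str
    bottom: Vec3          # entry point in the shared map frame
    top: Vec3
    floor_from: int
    floor_to: int
    reported_by: str


@dataclass
class Robot:
    robot_id: str
    can_climb_stairs: bool
    position: Vec3
    busy: bool = False


class SharedStairRegistry:
    """Stand-in for the networked map-sharing layer (assumption)."""

    def __init__(self) -> None:
        self._stairs: Dict[str, StaircaseReport] = {}

    def publish(self, report: StaircaseReport) -> None:
        self._stairs[report.stair_id] = report    # newest report for an id wins

    def reports(self) -> List[StaircaseReport]:
        return list(self._stairs.values())


def task_climber(stair: StaircaseReport, fleet: List[Robot]) -> Optional[Tuple[str, Vec3]]:
    """Send the nearest idle stair-capable robot to the staircase entry."""
    candidates = [r for r in fleet if r.can_climb_stairs and not r.busy]
    if not candidates:
        return None
    best = min(candidates, key=lambda r: math.dist(r.position, stair.bottom))
    best.busy = True
    return best.robot_id, stair.bottom


registry = SharedStairRegistry()
registry.publish(StaircaseReport("S1", (12.0, 3.0, 0.0), (16.0, 3.0, 3.2), 0, 1, "ugv1"))
fleet = [
    Robot("ugv1", can_climb_stairs=False, position=(10.0, 3.0, 0.0)),
    Robot("spot1", can_climb_stairs=True, position=(-30.0, 0.0, 0.0)),
    Robot("spot2", can_climb_stairs=True, position=(2.0, 0.0, 0.0)),
]
assignment = task_climber(registry.reports()[0], fleet)
print(assignment)  # ('spot2', (12.0, 3.0, 0.0))
```

Note that the wheeled robot that discovered the staircase is the closest robot, yet it is filtered out because it cannot climb; the capability check, not proximity alone, decides who gets the task.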

Single-Command Multi-Floor Exploration

The ultimate goal is to reduce operator workload. With our system, an operator can issue a single high-level command to reach a specific floor. The robot then autonomously plans a route through the detected staircases and traverses them without further human intervention.

A Spot robot autonomously navigating a multi-floor building from a single operator command.
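Planning from a single "go to floor N" command can be framed as a shortest-path search in which floors are nodes and detected staircases are edges. The sketch below runs Dijkstra's algorithm at floor-level granularity; the staircase tuples and traversal costs are hypothetical, and a real planner would also account for travel on each floor to reach a staircase's entry.

```python
import heapq
from typing import Dict, List, Tuple

# Hypothetical detected staircase: (stair_id, floor_a, floor_b, traversal_cost)
Stair = Tuple[str, int, int, float]


def plan_floors(stairs: List[Stair], start_floor: int, goal_floor: int) -> List[str]:
    """Dijkstra over floors with staircases as undirected edges.

    Returns the staircase ids to traverse, in order.
    """
    graph: Dict[int, List[Tuple[int, float, str]]] = {}
    for sid, a, b, cost in stairs:
        graph.setdefault(a, []).append((b, cost, sid))
        graph.setdefault(b, []).append((a, cost, sid))

    dist = {start_floor: 0.0}
    prev: Dict[int, Tuple[int, str]] = {}  # floor -> (previous floor, stair used)
    heap = [(0.0, start_floor)]
    while heap:
        d, floor = heapq.heappop(heap)
        if floor == goal_floor:
            break
        if d > dist.get(floor, float("inf")):
            continue  # stale heap entry
        for nxt, cost, sid in graph.get(floor, []):
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = (floor, sid)
                heapq.heappush(heap, (nd, nxt))

    if goal_floor not in dist:
        raise ValueError(f"no known staircase route to floor {goal_floor}")
    route: List[str] = []
    floor = goal_floor
    while floor != start_floor:
        floor, sid = prev[floor]
        route.append(sid)
    return route[::-1]


stairs = [("A", 0, 1, 20.0), ("B", 1, 2, 25.0), ("C", 0, 2, 80.0), ("D", 2, 3, 15.0)]
print(plan_floors(stairs, start_floor=0, goal_floor=3))  # ['A', 'B', 'D']
```

Here the route takes the cheaper chain A, B, D instead of the direct but costlier staircase C; as new staircases are detected and shared, rerunning the search picks up better routes.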

Adaptive Operator Interface

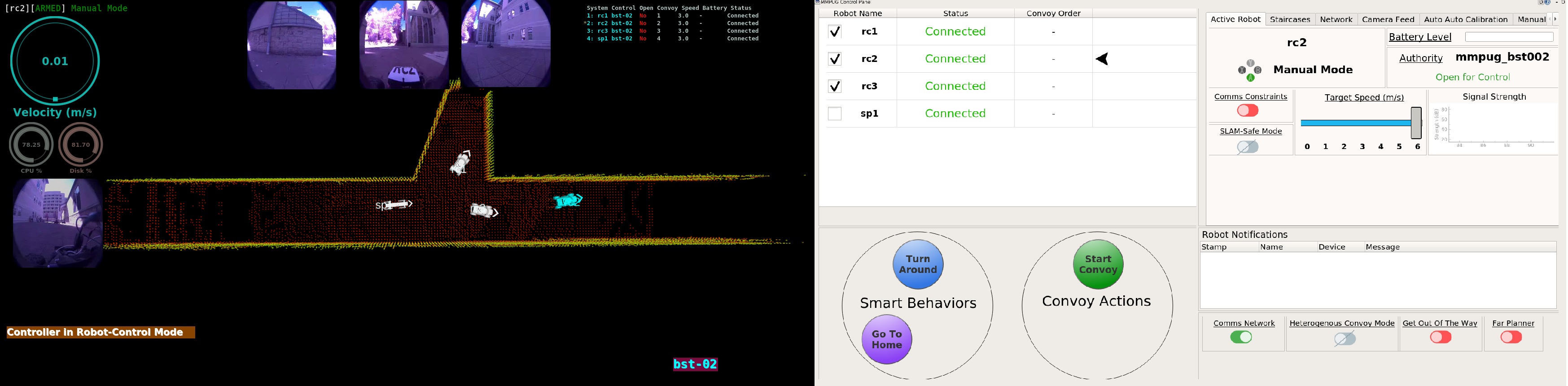

To minimize operator cognitive load, our interface is split into two main displays: an RViz window for 3D situational awareness (maps, camera feeds) and a custom touch-enabled GUI for robot commanding and feedback. The GUI is adaptive; based on feedback from the robot's behavior tree, it dynamically updates to present only valid actions to the operator. For example, the 'Start Convoy' action only becomes available when multiple robots are selected, preventing errors and simplifying control.
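The adaptive behavior of the GUI can be sketched as a pure function from the current selection and the latest behavior-tree feedback to the set of actions to enable. The `RobotFeedback` fields and every action name other than 'Start Convoy' are hypothetical; only the rule that 'Start Convoy' requires multiple selected robots comes from the interface description.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class RobotFeedback:
    """Hypothetical status a robot's behavior tree reports to the GUI."""
    robot_id: str
    mode: str                 # e.g. "manual", "waypoint", "exploration", "convoy"
    estopped: bool = False


def valid_actions(selected: List[str], feedback: Dict[str, RobotFeedback]) -> Set[str]:
    """Actions the GUI should enable for the current selection."""
    if not selected:
        return set()
    states = [feedback[r] for r in selected]
    if any(s.estopped for s in states):
        return {"Release E-Stop"}             # nothing else is valid while stopped
    actions = {"Stop", "Go To Waypoint", "Explore"}
    if len(selected) == 1:
        actions.add("Teleoperate")            # direct control only makes sense for one robot
    in_convoy = [s.mode == "convoy" for s in states]
    if len(selected) >= 2 and not any(in_convoy):
        actions.add("Start Convoy")           # requires multiple selected robots
    if all(in_convoy):
        actions.add("Stop Convoy")
    return actions


fb = {
    "ugv1": RobotFeedback("ugv1", "manual"),
    "ugv2": RobotFeedback("ugv2", "waypoint"),
    "spot1": RobotFeedback("spot1", "exploration", estopped=True),
}
print(sorted(valid_actions(["ugv1"], fb)))
print(sorted(valid_actions(["ugv1", "ugv2"], fb)))
```

Keeping this logic in one function of robot feedback means the GUI never has to guess: whenever a behavior tree reports a new mode, the enabled buttons can simply be recomputed.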